Learn how intelligent Engineering can increase productivity

Introduction

Expedition in a nutshell

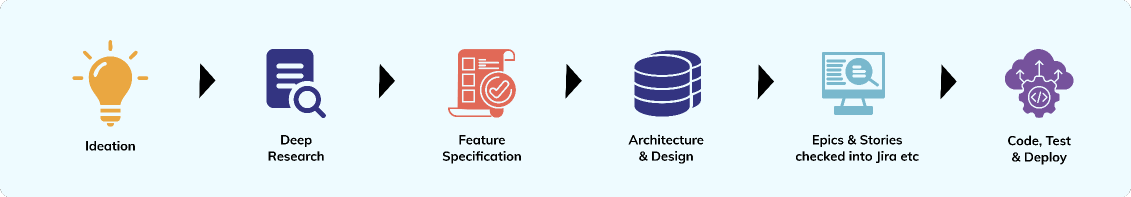

Everyone talks about coding assistants as the big breakthrough in software delivery. However, writing code represents merely a fraction of this journey, accounting for only 10-20% of the total effort involved in software development.

Delivering good software has always involved more. It starts with the what (researching the idea, defining and refining the product with user groups, and analysing requirements), moves on to the how (planning and design), then the build (development and testing), and finally the implementation (deployment and maintenance). Each of these activities requires different skills, processes, and tools, and each can now be meaningfully enhanced with modern AI. Whether it’s uncovering insights during analysis, accelerating design decisions, boosting developer productivity, automating tests, or making deployment more resilient, AI is playing the role of a true end-to-end partner.

While the fundamental software development lifecycle (SDLC) remains intact, whether it follows waterfall or agile methodologies, the way organizations execute within it is ready for transformation. The opportunity is not about replacing the cycle, but about reimagining it with AI to unlock faster innovation cycles and smarter, more resilient product delivery. This is at the heart of what our intelligent Engineering approach delivers.

Greg Reiser, Head of Client Partnerships, Americas, talks about applying AI across the entire SDLC, adopting a collaborative approach over delegation, and using AI to transform organizational roles and processes for better innovation and efficiency.

Highlights

AI assistance in every phase of the SDLC (research, analysis, design, build, test, deploy, and feedback) unlocks significant efficiency by cutting time spent on administrative tasks and empowering teams to focus on higher-value work like strategy, creativity, and informed decision-making. While product teams are struggling through the Forming and Storming stages of the Tuckman model with AI, the teams that have crossed the chasm into the Norming and Performing stages are seeing significant value in two key areas:

Codification of Engineering Culture

Every organisation has countless artefacts that recommend, suggest or prescribe everything from ways of working to hard engineering templates and patterns, yet these usually lie dormant and underused. Shaping the AI to work your way requires codifying the product engineering culture by feeding it artefacts such as architectural and coding guidelines and other templates. These artefacts act as the AI’s context, ensuring ongoing alignment with the organization’s product engineering culture.

Providing this context to the AI makes onboarding new team members significantly easier and reinforces established patterns, leading to more consistent architecture, higher-quality documentation that evolves with the codebase, and an improved developer experience through context-aware assistance.

This codified context helps not only the AI but also the people on the team: the same established guidelines can drive automated reviews throughout the SDLC, gently reinforcing the agreed practices.
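As a rough illustration of what "feeding artefacts as context" can look like in practice, the sketch below stitches a prioritised list of guideline documents into a single context block within a size budget. The `Artefact` structure, the `build_context` helper and the character budget are all hypothetical names chosen for this example; real assistants and agent tools each have their own mechanism for loading such context.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Artefact:
    """One piece of codified engineering culture (hypothetical structure)."""
    title: str  # e.g. "Coding guidelines"
    body: str   # the guideline text itself


def build_context(artefacts: list[Artefact], max_chars: int = 8000) -> str:
    """Join artefacts into one context block, in the order given.

    An artefact that would push the total past max_chars is skipped whole,
    so the most important artefacts should come first in the list.
    """
    sections: list[str] = []
    used = 0
    for artefact in artefacts:
        section = f"## {artefact.title}\n{artefact.body.strip()}\n\n"
        if used + len(section) > max_chars:
            continue  # skip rather than cut a guideline off mid-rule
        sections.append(section)
        used += len(section)
    return "".join(sections)


if __name__ == "__main__":
    # Illustrative content only; real artefacts would come from your own docs.
    context = build_context([
        Artefact("Coding guidelines", "Prefer small, well-named functions."),
        Artefact("Architecture", "Services communicate through events."),
    ])
    print(context)
```

Skipping an artefact whole, instead of truncating it, is a deliberate choice here: a half-included guideline can mislead the AI more than a missing one.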

Realising the promise of efficiency and productivity

As agentic AI workflows transform the entire software development lifecycle, effective teams in the advanced stages of Norming and Performing are seeing more than 50% of their specifications, stories, code, tests and implementation done by AI and verified by developers, delivering significantly increased productivity per engineer. Leverage AI through each of the following phases:

Relevancy

How would you answer these questions?

If you’ve answered yes to several of these questions, you should consider integrating AI into your product engineering and delivery process. The challenges listed above are interlinked and can be classified into a simple matrix for determining the applicability of AI in the SDLC process.

Preparation

Selecting the right project to leverage AI in the SDLC requires careful preparation in terms of project and team context, so that the journey has the fewest blind spots and the desired impact.

Select the right project(s)

Choosing the right candidate project is the foundation of a successful pilot. The project should be meaningful enough to demonstrate tangible impact but not so mission-critical that failure carries high risk. Having the right safety nets in place, such as test environments, monitoring, and rollback options, together with detailed AI-assisted code reviews (especially while the team is still establishing the practice), ensures the team can experiment confidently without jeopardizing business continuity.

Build the right team(s)

A strong, stable product team with shared context is essential to ensure smooth adoption of AI tools. Teams that deeply understand both the product and its business drivers can make better use of AI augmentation. Including a few seed individuals with prior experience in AI or automation further strengthens the team, as they can establish and codify currently relevant good practices and hygiene and mentor others on them.

Start with the right level of challenge for the AI for your context

Target one step of the SDLC or everything all at once for a contained problem statement. Start with what makes sense for your context.

In the case of brownfield: focus AI on the SDLC stage with the most friction, such as backlog analysis, testing, or deployment, to deliver early wins, remove bottlenecks, and demonstrate clear value.

In the case of greenfield: target the entire SDLC, from researching, understanding and solutioning to shaping the solution, building, testing, deploying and maintaining it.

Define success criteria

Clear success criteria ensure that the adoption of AI delivers measurable outcomes. These metrics should reflect both efficiency gains (such as reduced cycle times, faster recovery, or lower costs) and quality improvements (such as higher resilience, better accuracy, or tighter alignment to requirements). Well-defined success measures create a shared understanding of what “good” looks like and provide evidence for scaling the transformation.

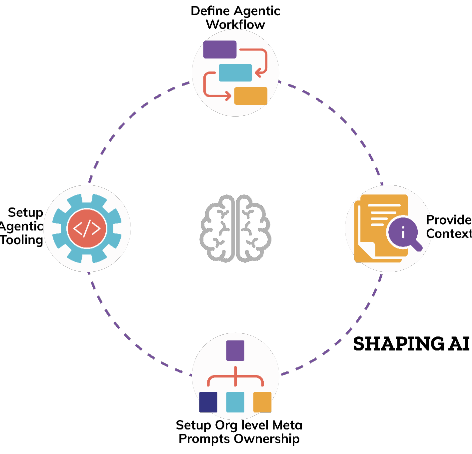

Shape the AI

Start by shaping the AI to your organisational or team needs. This entails a four-step process:

1. Create your agentic workflow, which typically covers the Explore, Plan, Test and Implement phases

2. Provide the AI with organisational business, operational and technical context

3. Set up an organisational and team-level prompting structure and guidelines

4. Select the tools ecosystem and connect it to internal and external systems

Pro-tips for managing ideas

Early Stages

During the early stages, focus on “leading” indicators: developer sentiment, tool usage, and workflow metrics.

Survey

Conduct developer surveys and track AI usage statistics (active users, acceptance rates) as GitHub recommends.

Align

Use DORA‑style metrics to ensure speedups don’t sacrifice quality. Align these KPIs to business outcomes (e.g. shorter time-to-market, fewer critical bugs).

Measure

Set clear, measurable goals for AI use and monitor both productivity and code quality over time.
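To make the tips above concrete, here is a minimal sketch of how a team might compute a suggestion acceptance rate alongside two DORA-style measures (lead time for changes and change failure rate). The `Deployment` record, the function names and the sample figures are assumptions made for illustration; in practice the inputs would come from your own tooling and delivery pipeline.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass(frozen=True)
class Deployment:
    """One production deployment (hypothetical record shape)."""
    committed_at: datetime  # when the change was committed
    deployed_at: datetime   # when it reached production
    caused_failure: bool    # whether it led to an incident or rollback


def acceptance_rate(suggestions_shown: int, suggestions_accepted: int) -> float:
    """Share of AI suggestions that developers accepted, from 0.0 to 1.0."""
    if suggestions_shown == 0:
        return 0.0
    return suggestions_accepted / suggestions_shown


def median_lead_time(deployments: list[Deployment]) -> timedelta:
    """Median time from commit to production (lead time for changes)."""
    if not deployments:
        raise ValueError("at least one deployment is required")
    seconds = [
        (d.deployed_at - d.committed_at).total_seconds() for d in deployments
    ]
    return timedelta(seconds=median(seconds))


def change_failure_rate(deployments: list[Deployment]) -> float:
    """Share of deployments that caused a failure, from 0.0 to 1.0."""
    if not deployments:
        return 0.0
    failures = sum(1 for d in deployments if d.caused_failure)
    return failures / len(deployments)


if __name__ == "__main__":
    # Made-up sample data, purely to show the calls.
    start = datetime(2024, 1, 1, 9, 0)
    sample = [
        Deployment(start, start + timedelta(hours=2), False),
        Deployment(start, start + timedelta(hours=4), True),
        Deployment(start, start + timedelta(hours=6), False),
    ]
    print(acceptance_rate(200, 50))
    print(median_lead_time(sample))
    print(change_failure_rate(sample))
```

Tracking the acceptance rate next to the failure rate is the point of the pairing: a rising acceptance rate only counts as progress if the change failure rate does not rise with it.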

Itinerary

While plenty of literature points towards vibe coding being the next wave of software engineering, it’s the journey from vibe coding to viable coding that will help teams unlock the potential of AI in software engineering.

Adopting AI in software development requires a layered approach: organizations provide foundational guidelines, tool choices, and ecosystem patterns, while teams retain autonomy to adapt these to their specific context.

Sahaj’s intelligent Engineering framework isn’t meant to be prescriptive. It represents a collection of proven techniques and sensible defaults to be used as a guideline and adapted to your organisational needs.

The intelligent Engineering framework comprises two interlinked phases, Shaping the AI and Leading the AI, connected by a continuous loop of learning and refining.

Phase 1, Shaping the AI, consists of setting up the workflow, context and prompt engineering, and tooling at an organisational level, while giving teams the flexibility to adopt and adapt these and then execute on their adapted workflow. Start by shaping the AI to your organisational or team needs.

Phase 2 is about Leading the AI through the implementation and includes the following steps:

Before you embark on the journey, there are a few patterns that you need to consider. The journey to leveraging AI will involve learning new skills and behaviours and will initially be a disruptive change to the existing processes. Below are some behaviours to expect while going through the process:

RESTART
